I figure I should post about my other interests from time to time, and for all of you just here for the faggotry, pretty fuckin bummer for you that those happen to include math. Anyway, a day or two ago in one of my classes the prof pointed out that while sure, you can see a neuron in a neural network as an affine function of the previous layer's outputs followed by our ReLU, the affine part is also just the dot product of the weight vector going into that neuron with the previous layer's values, plus a bias. And a dot product is cosine similarity scaled by the two lengths: w · x = |w| |x| cos(θ). So if you think of the input and the weight vector as two points in some high-dimensional space, the neuron is measuring the cosine of the angle between them, like a normal-ass dot product. Then we add our bias (the constant term of the affine function) and ReLU the bitch, which together is just checking whether that scaled cosine is above a certain limit, namely -b. If it is, return how far above; if not, return 0.
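Here's a quick sanity check of that in NumPy, since I didn't fully believe it until I typed it out. This is just a sketch: the names `neuron`, `neuron_via_cosine`, and `cosine_cutoff` are made up by me, and the weights are toy numbers, not anything learned.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def neuron(x, w, b):
    # The usual view: affine function of the inputs, then ReLU.
    return relu(np.dot(w, x) + b)

def cosine(x, w):
    # Cosine of the angle between the input and the weight vector.
    return np.dot(w, x) / (np.linalg.norm(w) * np.linalg.norm(x))

def neuron_via_cosine(x, w, b):
    # Same neuron, written as |w| |x| cos(theta) + b, then ReLU.
    scale = np.linalg.norm(w) * np.linalg.norm(x)
    return relu(scale * cosine(x, w) + b)

def cosine_cutoff(x, w, b):
    # The neuron fires exactly when cos(theta) exceeds this value.
    return -b / (np.linalg.norm(w) * np.linalg.norm(x))

# Toy values (assumptions for illustration only).
w = np.array([3.0, 4.0])
b = -1.0
x_near = np.array([1.0, 0.0])    # points roughly along w
x_far = np.array([-1.0, 0.5])    # points away from w

for x in (x_near, x_far):
    print(neuron(x, w, b), neuron_via_cosine(x, w, b),
          cosine(x, w) > cosine_cutoff(x, w, b))
```

Note the cutoff on the cosine depends on |x| unless you normalize your inputs, which is why plain "cosine similarity" is only the whole story when the lengths are fixed.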

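So the cutoff for the ReLU can be interpreted as a sphere with a learned radius in the high-dimensional space surrounding the point of our weight vector! (Strictly speaking the cutoff is a flat hyperplane perpendicular to the weight vector, but if you normalize your inputs onto the unit sphere, it carves out a round cap centered on the weight direction, which is the picture I have in my head.) So all the neuron is doing is checking whether we are inside this area: if we are, it says "here's how far in," and if we're not, it says 0, i.e. "basically all the same to me." And the composition of all of these checks is just a whole bunch of cuts on input space, hence the spline representation of NNs (a ReLU network is a piecewise-linear function). And the only reason you can't interpret NNs is that you can't actually see image space or whatever.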
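To see the "bunch of cuts" thing concretely, here's a tiny made-up network with 2 inputs and 3 hidden ReLU neurons. Everything here (`W`, `b`, `v`, `pattern`, `net`) is a toy I invented for illustration. The idea: which neurons are on or off tells you which piece of input space you're in, and inside one piece the network is exactly affine, so the output at a midpoint is the average of the outputs at the endpoints. Cross a cut and that stops being true.

```python
import numpy as np

# Toy one-hidden-layer ReLU network (hypothetical weights, not learned).
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])
b = np.array([0.0, 0.0, -1.0])
v = np.array([1.0, -2.0, 0.5])

def pattern(x):
    # Which hidden neurons are active: this identifies the linear region.
    return tuple((W @ x + b > 0).tolist())

def net(x):
    # Output is a weighted sum of the ReLU'd hidden neurons.
    return float(v @ np.maximum(W @ x + b, 0.0))

x1 = np.array([2.0, 3.0])
x2 = np.array([1.0, 1.0])    # same region as x1
x3 = np.array([-1.0, 3.0])   # across the first neuron's cut

# Same region: the midpoint output is the average of the endpoint outputs.
same_mid = net((x1 + x2) / 2)
same_avg = (net(x1) + net(x2)) / 2

# Different regions: the kink at the cut breaks that.
cross_mid = net((x1 + x3) / 2)
cross_avg = (net(x1) + net(x3)) / 2

print(pattern(x1), pattern(x2), pattern(x3))
print(same_mid, same_avg)
print(cross_mid, cross_avg)
```

Each hidden neuron contributes one cut (a hyperplane), and the pieces are whatever is left between them. With more layers the cuts bend, but the function stays piecewise linear.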

How fucked up is that!

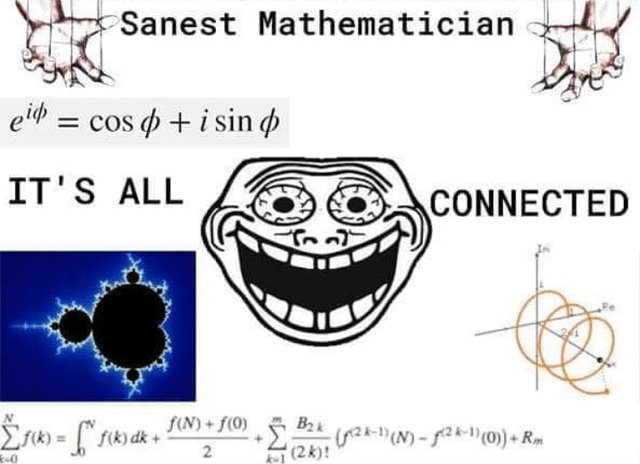

Every day I understand this image more: